In the past few years, technological advances and greater computational power have driven an uptick in the adoption of Artificial Intelligence (AI)¹ by financial firms, central banks and financial sector regulatory agencies. AI is undergoing a profound transformation, from analytical models designed for specific tasks to generative systems capable of producing human-like content and engaging in complex decision-making. This shift marks a new era in which technology is no longer just a passive tool but an active participant in shaping strategy, leadership, and operations. AI agents are increasingly being deployed across industries, including in financial institutions and central banks, to create “cognitive enterprises” that use AI to amplify human cognition and automate a wide range of tasks.

This blog explores the adoption of AI in SEACEN’s member central banks (MCBs), the risks it poses to financial stability, and how central banks and regulators should respond.

Opportunities for Central Banks

For central banks, AI presents both immense opportunities and significant risks. On the opportunity side, AI can enhance productivity, improve forecasting, and support monetary policy implementation. Its benefits include greater operational efficiency, stronger regulatory compliance, financial product customization and advanced analytics. With the advent of generative AI (GenAI) and large language models (LLMs), the range of use cases has become more diverse.

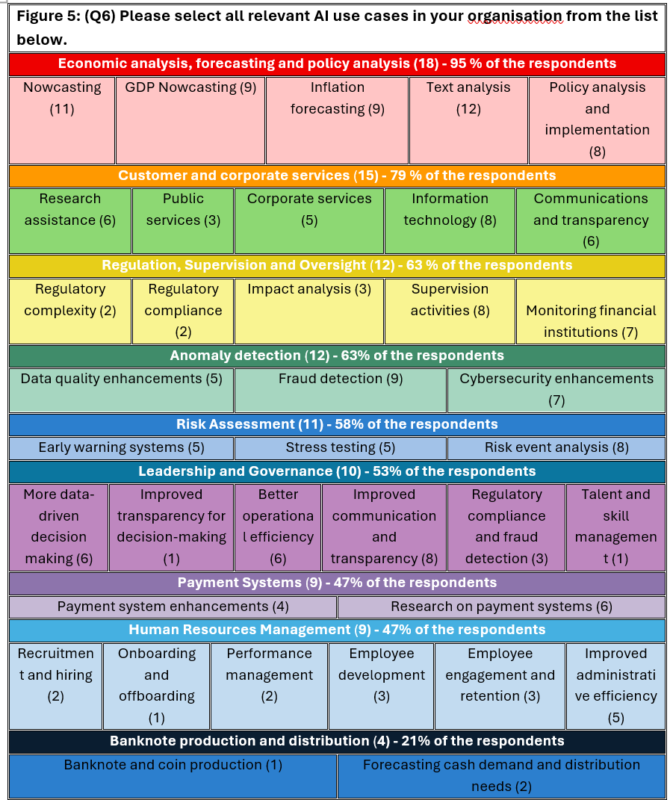

According to a survey conducted by the SEACEN Centre, member central banks (MCBs) and stakeholders appear to be rapidly embracing innovative data science techniques in their activities, including economic research and statistics². The survey finds that AI adoption is progressing in central banks: 89% of respondents already use AI tools, while only 11% report no current use. AI is most widely employed in economic analysis and forecasting (95%), followed by customer and corporate services (79%). Significant uptake is also observed in anomaly detection and regulation/supervision (63%), risk assessment (58%), and payment systems (47%). AI also serves as a catalyst for broader innovation in other central banking functions. The accompanying chart shows the relevant use cases in MCBs.
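Because the survey sample is small, it can help to read these percentages as approximate head counts. The sketch below is a rough illustration only: it assumes that all 19 responding institutions mentioned in the survey description answered every question, which the survey summary does not confirm. The names `RESPONDENTS`, `reported_shares`, `implied_count` and `is_consistent` are introduced here purely for illustration.

```python
# Assumption (not stated in the survey summary): all 19 responding
# institutions answered every question, so each share is a fraction of 19.
RESPONDENTS = 19

# Percentages as reported in the text above.
reported_shares = {
    "uses AI tools": 89,
    "no current use": 11,
    "economic analysis and forecasting": 95,
    "customer and corporate services": 79,
    "anomaly detection and regulation/supervision": 63,
    "risk assessment": 58,
    "payment systems": 47,
}


def implied_count(percent, n=RESPONDENTS):
    """Return the whole number of respondents closest to `percent` of n."""
    return round(percent * n / 100)


def is_consistent(percent, n=RESPONDENTS):
    """True if the implied count rounds back to the reported percentage."""
    return round(100 * implied_count(percent, n) / n) == percent


implied = {label: implied_count(p) for label, p in reported_shares.items()}

for label, p in reported_shares.items():
    print(f"{label}: {p}% is roughly {implied[label]} of {RESPONDENTS}")
```

Under that assumption, a single institution accounts for about five percentage points, so small gaps between categories should be read with caution.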

AI is becoming strategically important for MCBs as they seek to improve operational efficiency and innovation, freeing staff to focus on higher-value tasks, decision-making, and financial stability monitoring. It fosters the development of IT and data science skills and improves financial data analysis and risk detection. It also enables the automation of routine banking tasks and the use of synthetic data to enhance operations. In some MCBs, it acts as a knowledge assistant that improves the quality of outputs, summarizes research and meeting minutes, supports work planning, and aids policymaking by improving decision quality.

What Are the Risks of AI Adoption?

While AI offers benefits such as operational efficiency, regulatory compliance, and advanced analytics, it also introduces significant risks to financial stability. The Financial Stability Board (FSB) highlights vulnerabilities that could amplify systemic risk, including heavy reliance on third-party providers, market correlations, cyber threats, and model risk tied to poor data quality and governance. Generative AI (GenAI) adds further challenges, enabling financial fraud and disinformation. Misaligned AI systems operating outside legal and ethical boundaries can harm financial markets, while long-term adoption may reshape market structures, macroeconomic conditions, and energy consumption. The integration of AI into central banking operations demands a reassessment of risk frameworks and the embedding of robust cybersecurity, governance, and risk management protocols throughout the AI lifecycle – from design to deployment – to mitigate the evolving threats.

One of the most pressing concerns for SEACEN’s MCBs in adopting AI is the evolving cybersecurity landscape. Central banks face heightened exposure because of the sensitivity of their data and their critical role in markets. In our survey, institutions identified the following key risks: information security (95%), third-party dependency (84%), model opacity (79%), operational vulnerabilities (68%), reputational damage (53%), and talent shortages (53%). Privacy concerns, especially regarding third-party data handling, remain a prominent worry for MCBs.

Challenges to AI Adoption in Central Banking

AI promises efficiency gains, advanced analytics, and improved policymaking for central banks, but AI adoption faces significant internal and external challenges. Internally, outdated IT systems, siloed data, and limited digital readiness hinder integration. Organizational barriers such as fragmented governance, cultural resistance, and lack of strategic alignment slow progress. Talent shortages in AI and data science, combined with fears of workforce displacement and erosion of analytical skills, add complexity.

Externally, regulatory uncertainty, strict data protection laws, and cybersecurity risks pose major hurdles. Cloud-based solutions introduce vulnerabilities, while reliance on third-party vendors creates systemic risks. AI development, especially of foundation models, requires vast computing power and data resources. Because only a handful of service providers can supply these, the market is highly concentrated. This dependency heightens fragility: disruptions in cloud services, chips, or data aggregation could cascade across financial institutions. Market concentration also risks correlated behaviors, which could amplify shocks.

Generative AI compounds these issues with unpredictability, model drift, and opacity, making oversight difficult. Risks include overfitting, data poisoning, and “hallucinations,” while limited explainability undermines compliance. Budget constraints, reputational risks, and ethical concerns like bias further complicate adoption.

To mitigate these challenges, central banks must invest in governance, risk management, and capacity building. Careful planning and tailored regulatory frameworks are essential to harness AI responsibly without compromising financial stability.

Conclusion

AI offers central banks efficiency gains and advanced analytics but introduces systemic risks. Heavy reliance on third-party providers and market concentration create fragility: disruptions in cloud services or data aggregation could cascade across institutions. Generative AI compounds challenges with unpredictability, model drift, and opacity, increasing risks of fraud, misinformation, and compliance failures. Cybersecurity threats and privacy concerns remain critical, alongside talent shortages and ethical issues like bias. Robust governance, risk management, and tailored regulatory frameworks are essential to mitigate these vulnerabilities. Careful planning and oversight will enable central banks to harness AI’s benefits without compromising financial stability.

1. For the purposes of this blog, “Artificial Intelligence” is defined in a deliberately broad and inclusive way. It encompasses not only large language models (LLMs) and generative pre-trained transformers (GPT), but also a broad spectrum of algorithmic and data-driven approaches, ranging from machine learning techniques (such as decision trees, clustering, and support vector machines), deep learning, and generative AI to rule-based expert systems, natural language processing (NLP), and predictive analytics.

2. The South East Asian Central Banks (SEACEN) Centre surveyed 33 member, associate and observer central banks and monetary authorities to understand the adoption of AI in central banking. The aim of SEACEN’s “Artificial Intelligence in Central Banking Survey” was to collect information from member central banks and monetary authorities (MCBs) and other key stakeholders to assess (a) the extent of AI adoption, current use cases and future plans, (b) the motivations, risks and challenges in using AI, and (c) the governance of AI across Asia‑Pacific central banks. Even pilot projects, exploratory initiatives, or small-scale departmental applications that utilise such methods were within the scope of the survey. Nineteen institutions responded, comprising 13 full member central banks (MCBs) and six associate or observer members, representing a 58% overall response rate and a 68% response rate among full members.

Mark is a Senior Financial Sector Specialist in the Financial Stability, Supervision and Payments pillar at the SEACEN Centre.